Lucet

A Bytecode Alliance project

Lucet is a native WebAssembly compiler and runtime. It is designed to safely execute untrusted WebAssembly programs inside your application.

Check out our announcement post on the Fastly blog.

Lucet uses, and is developed in collaboration with, the Bytecode Alliance's Cranelift code generator. It powers Fastly's Terrarium platform.

Lucet's documentation is available at https://bytecodealliance.github.io/lucet (sources).

Getting started

To learn how to set up the toolchain, and then compile and run your first WebAssembly application using Lucet, see Getting started.

Development environment

Lucet is developed and tested on x86-64 Linux, with experimental support for macOS. For compilation instructions, see Compiling.

Supported languages and platforms

Lucet supports running WebAssembly programs written in C and C++ (via clang), Rust, and AssemblyScript. It does not yet support the entire WebAssembly spec, but full support is planned.

Lucet's runtime currently supports x86-64 based Linux systems, with experimental support for macOS.

Security

The Lucet project aims to provide support for secure execution of untrusted code. Security is achieved through a combination of Lucet-supplied security controls and user-supplied security controls. See Security for more information on the Lucet security model.

Reporting Security Issues

The Lucet project team welcomes security reports and is committed to providing prompt attention to security issues. Security issues should be reported privately via Fastly’s security issue reporting process. Remediation of security vulnerabilities is prioritized. The project team endeavors to coordinate remediation with third-party stakeholders, and is committed to transparency in the disclosure process.

Using Lucet

To get started using Lucet, you will need to compile and install Lucet.

Once the Lucet command-line tools are available, you can run a Lucet "Hello World" application, or use the lucet-runtime API to embed a Lucet program in your Rust application.

Compiling Lucet from scratch

Specific instructions are available for some flavors of Linux and for macOS (experimental).

If you are using another platform, or if the provided instructions are not working, it may be helpful to try adapting the setup code in the Dockerfile that defines the Lucet continuous integration environment. While the image is defined in terms of Ubuntu 18.04, many of the packages are available through other package managers and operating systems.

Compiling on Linux

We successfully compiled Lucet on Arch Linux, Fedora, Gentoo and Ubuntu. Only x86_64 CPUs are supported at this time.

Option 1: installation on Ubuntu, with a sidecar installation of LLVM/clang

The following instructions only work on Ubuntu. They install a recent version of LLVM and clang (in /opt/wasi-sdk), so that WebAssembly code can be compiled on Ubuntu versions older than 19.04.

First, the curl and cmake packages must be installed:

apt install curl ca-certificates cmake

You will need to install wasi-sdk as well. Note that you may need to run dpkg with elevated privileges to install the package.

curl -sS -L -O https://github.com/WebAssembly/wasi-sdk/releases/download/wasi-sdk-10/wasi-sdk_10.0_amd64.deb \
&& dpkg -i wasi-sdk_10.0_amd64.deb && rm -f wasi-sdk_10.0_amd64.deb

Install the latest stable version of the Rust compiler:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

source $HOME/.cargo/env

Enter your clone of the Lucet repository, and then fetch/update the submodules:

cd lucet

git submodule update --init --recursive

Finally, compile the toolchain:

make install

In order to use clang to compile WebAssembly code, you need to adjust your PATH to use tools from /opt/wasi-sdk/bin instead of the system compiler. Alternatively, use the set of commands prefixed by wasm32-wasi-, such as wasm32-wasi-clang instead of clang.

Option 2: installation on a recent Linux system, using the base compiler

Support for WebAssembly was introduced in LLVM 8, released in March 2019.

As a result, Lucet can be compiled with an existing LLVM installation, provided that it is up to date. Most distributions now include LLVM >= 8, so an additional installation is not required to compile to WebAssembly.

On distributions such as Ubuntu (19.04 or newer) and Debian (bullseye or newer), the following command installs the prerequisites:

apt install curl ca-certificates clang lld cmake

On Arch Linux:

pacman -S curl clang lld cmake

Next, install the WebAssembly compiler builtins:

curl -sL https://github.com/WebAssembly/wasi-sdk/releases/download/wasi-sdk-10/libclang_rt.builtins-wasm32-wasi-10.0.tar.gz | \
sudo tar x -zf - -C /usr/lib/llvm-*/lib/clang/*

Install the latest stable version of the Rust compiler:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

source $HOME/.cargo/env

Install the WASI sysroot:

mkdir -p /opt

curl -L https://github.com/WebAssembly/wasi-sdk/releases/download/wasi-sdk-10/wasi-sdk-10.0-linux.tar.gz | \
sudo tar x -zv -C /opt -f - wasi-sdk-10.0/share && \
sudo ln -s /opt/wasi-sdk-*/share/wasi-sysroot /opt/wasi-sysroot

Enter your clone of the Lucet repository, and then fetch/update the submodules:

cd lucet

git submodule update --init --recursive

Set the LLVM path:

export LLVM_BIN="$(echo /usr/lib/llvm-*/bin)"

Finally, install the Lucet toolchain with:

make install

and update your shell's environment variables:

source /opt/lucet/bin/setenv.sh

You may want to add these environment variables to your shell's configuration.

The standard system compiler can be used to compile to WebAssembly, simply by adding --target=wasm32-wasi to the compilation flags.

Compiling on macOS

Prerequisites

Install llvm, rust and cmake using Homebrew:

brew install llvm rust cmake

In order to compile applications written in C to WebAssembly, clang builtins need to be installed:

curl -sL https://github.com/WebAssembly/wasi-sdk/releases/download/wasi-sdk-10/libclang_rt.builtins-wasm32-wasi-10.0.tar.gz | \
tar x -zf - -C /usr/local/opt/llvm/lib/clang/10*

As well as the WASI sysroot:

sudo mkdir -p /opt

curl -sS -L https://github.com/WebAssembly/wasi-sdk/releases/download/wasi-sdk-10/wasi-sysroot-10.0.tar.gz | \
sudo tar x -zf - -C /opt

Compiling and installing Lucet

Enter the Lucet git repository clone, and fetch/update the submodules:

cd lucet

git submodule update --init

Define the location of the WASI sysroot installation:

export WASI_SYSROOT=/opt/wasi-sysroot

Finally, compile and install the toolchain:

env LUCET_PREFIX=/opt/lucet make install

Change LUCET_PREFIX to the directory you would like to install Lucet into. /opt/lucet is the default directory.

Setting up the environment

In order to add /opt/lucet to the command search path, as well as register the library path for the Lucet runtime, the following command can be run interactively or added to the shell startup files:

source /opt/lucet/bin/setenv.sh

Running the test suite

To run the test suite, the following environment variables must be set in addition to WASI_SYSROOT:

export CLANG_ROOT="$(echo /usr/local/opt/llvm/lib/clang/10*)"

export CLANG=/usr/local/opt/llvm/bin/clang

And the test suite can then be run with the following command:

make test

Your first Lucet application

Ensure the Lucet command-line tools are installed on your machine.

Create a new work directory in the lucet directory:

mkdir -p src/hello

cd src/hello

Save the following C source code as hello.c:

#include <stdio.h>

int main(void)
{
    puts("Hello world");
    return 0;
}

Time to compile to WebAssembly! The development environment includes a version of the Clang toolchain that is built to generate WebAssembly by default. The related commands are accessible from your current shell, and are prefixed by wasm32-wasi-.

For example, to create a WebAssembly module hello.wasm from hello.c:

wasm32-wasi-clang -Ofast -o hello.wasm hello.c

The next step is to use Lucet to build native x86_64 code from that WebAssembly file:

lucetc-wasi -o hello.so hello.wasm

lucetc is the WebAssembly to native code compiler. The lucetc-wasi command runs the same compiler, but automatically configures it to target WASI.

hello.so is created and ready to be run:

lucet-wasi hello.so

Example usage of the lucet-runtime crate.

The following Rust code loads a WebAssembly module compiled using lucetc-wasi and calls its main() function.

.cargo/config:

These flags must be set in order to have symbols from the runtime properly exported.

[build]

rustflags = ["-C", "link-args=-rdynamic"]

Cargo.toml:

[package]

name = "lucet-runtime-example"

version = "0.1.0"

edition = "2018"

[dependencies]

lucet-runtime = { path = "../../lucet-runtime" }

lucet-wasi = { path = "../../lucet-wasi" }

# or, if compiling against released crates.io versions:

# lucet-runtime = "0.5.1"

# lucet-wasi = "0.5.1"

src/main.rs:

use lucet_runtime::{DlModule, Limits, MmapRegion, Region};
use lucet_wasi::WasiCtxBuilder;

fn main() {
    // ensure the WASI symbols are exported from the final executable
    lucet_wasi::export_wasi_funcs();
    // load the compiled Lucet module
    let dl_module = DlModule::load("example.so").unwrap();
    // create a new memory region with default limits on heap and stack size
    let region = MmapRegion::create(1, &Limits::default()).unwrap();
    // instantiate the module in the memory region
    let mut instance = region.new_instance(dl_module).unwrap();
    // prepare the WASI context, inheriting stdio handles from the host executable
    let wasi_ctx = WasiCtxBuilder::new().inherit_stdio().build().unwrap();
    instance.insert_embed_ctx(wasi_ctx);
    // run the WASI main function
    instance.run("main", &[]).unwrap();
}

End-to-end integrity and authentication of Lucet assets

Lucet tools have the ability to verify digital signatures of their input, and produce signed output, ensuring continuous trust even if assets have to transit over unsecured networks.

- lucetc can be configured to compile source code (.wasm, .wat files) only if a signature is present and can be verified using a pre-configured public key.
- Shared libraries produced by the lucetc compiler can themselves embed a signature, computed using the same secret key as the source code, or a different key.
- The lucet-wasi runtime can be configured to run native code from lucetc only if it embeds a valid signature for a pre-configured public key.

Secret keys can be protected by a password for interactive use, or be password-less for automation.

Lucet signs and verifies assets with Minisign, via the minisign crate. Key pairs and signatures are fully compatible with other Minisign implementations.

Lucetc signature verification

Source files (.wasm, .wat) can be signed with minisign, rsign2, or another Minisign implementation.

The Lucet container ships with rsign2 preinstalled.

Creating a new key pair

rsign generate

Please enter a password to protect the secret key.

Password:

Password (one more time):

Deriving a key from the password in order to encrypt the secret key... done

The secret key was saved as /Users/j/.rsign/rsign.key - Keep it secret!

The public key was saved as rsign.pub - That one can be public.

Files signed using this key pair can be verified with the following command:

rsign verify <file> -P RWRJwC2NawX3xnBK6mvAAehmFWQ6Z1PLXoyIz78LYkLsklDdaeHEcAU5

Signing a WebAssembly input file

rsign sign example.wasm

Password:

Deriving a key from the password and decrypting the secret key... done

The resulting signature is stored in a file named after the signed file with a .minisig suffix appended (in the example above: example.wasm.minisig).

Configuring lucetc to verify signatures

Source files can be verified by adding the following command-line switches to lucetc.

--signature-verify

--signature-pk=<path to the public key file>

lucetc assumes that a source file and its signature are in the same directory.

Compilation will only start if the signature is valid for the given public key.
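The naming and lookup convention above can be sketched in Rust. signature_path is a hypothetical helper written for illustration, not part of lucetc; it assumes only what is stated here: the signature sits in the same directory as the source file, under the file's full name plus .minisig.

```rust
use std::ffi::OsString;
use std::path::{Path, PathBuf};

/// Hypothetical helper: the detached signature for `signed_file` is expected
/// in the same directory, named after the full file name plus ".minisig".
fn signature_path(signed_file: &Path) -> PathBuf {
    let mut name: OsString = signed_file.as_os_str().to_os_string();
    name.push(".minisig");
    PathBuf::from(name)
}
```

Appending to the full name, rather than calling Path::with_extension, matters here: with_extension would replace .wasm and yield example.minisig.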

Producing signed shared objects

Shared libraries produced by the lucetc compiler can embed a signature.

Creating a key pair

This requires a secret key, which can be created either with a third-party Minisign implementation or by lucetc itself:

lucetc --signature-keygen \
--signature-sk <file to store the secret key into> \
--signature-pk <file to store the public key into>

By default, secret keys are protected by a password. If this is inconvenient, lucetc also supports raw, unencrypted secret keys.

In order to use raw keys, add a raw: prefix before the file name (ex: --signature-sk=raw:/opt/etc/lucet.key).
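A minimal sketch of how a tool might interpret a --signature-sk value under the raw: convention described above. SecretKeySource and parse_secret_key_arg are hypothetical names; lucetc's actual argument handling is not shown in this document.

```rust
use std::path::PathBuf;

/// How a secret key file should be read (hypothetical type).
#[derive(Debug, PartialEq)]
enum SecretKeySource {
    /// "raw:<path>": unencrypted key, usable without a password.
    Raw(PathBuf),
    /// Plain path: password-protected key; the password is asked interactively.
    Encrypted(PathBuf),
}

/// Split off the documented "raw:" prefix, if present.
fn parse_secret_key_arg(arg: &str) -> SecretKeySource {
    match arg.strip_prefix("raw:") {
        Some(path) => SecretKeySource::Raw(PathBuf::from(path)),
        None => SecretKeySource::Encrypted(PathBuf::from(arg)),
    }
}
```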

Signing shared objects produced by lucetc

In order to embed a signature in a shared object produced by lucetc or lucetc-wasi, the following command-line switches should be present:

--signature-create

--signature-sk <path to the secret key file>

If the secret key was encrypted with a password, the password will be asked interactively.

Signatures are directly stored in the .so or .dylib files.

Key pairs used for source verification and for signing compiled objects can be different, and both operations are optional.

Signature verification in the Lucet runtime

lucet-wasi can be configured to run only trusted native code, that includes a valid signature for a pre-configured key. In order to do so, the following command-line switches have to be present:

--signature-verify

--signature-pk <path to the public key file>

Lucet components

- lucetc: the Lucet Compiler.
- lucet-runtime: the runtime for WebAssembly modules compiled through lucetc.
- lucet-wasi: runtime support for the WebAssembly System Interface (WASI).
- lucet-objdump: an executable for inspecting the contents of a shared object generated by lucetc.
- lucet-spectest: a driver for running the official WebAssembly spec test suite under Lucet.
- lucet-wasi-sdk: convenient wrappers around the WASI Clang toolchain and lucetc.
- lucet-module: data structure definitions and serialization functions that we emit into shared objects with lucetc, and read with lucet-runtime.

lucetc

lucetc is the Lucet Compiler.

The Rust crate lucetc provides an executable lucetc.

It compiles WebAssembly modules (.wasm or .wat files) into native code (.o or .so files).

Example

lucetc example.wasm --output example.so --bindings lucet-wasi/bindings.json --reserved-size 64MiB --opt-level speed_and_size

This command compiles example.wasm, a WebAssembly module, into a shared library example.so. At run time, the heap can grow up to 64 MiB.

Lucetc can produce ELF (on Linux) and Mach-O (on macOS) objects and libraries. For debugging purposes or code analysis, it can also dump Cranelift code.

Usage

lucetc [FLAGS] [OPTIONS] [--] [input]

FLAGS:
    --count-instructions    Instrument the produced binary to count the number of wasm operations the translated program executes
    -h, --help              Prints help information
    --signature-keygen      Create a new key pair
    --signature-create      Sign the object file
    -V, --version           Prints version information
    --signature-verify      Verify the signature of the source file

OPTIONS:
    --bindings <bindings>...                   path to bindings json file
    --emit <emit>                              type of code to generate (default: so) [possible values: obj, so, clif]
    --guard-size <guard_size>                  size of linear memory guard. must be multiple of 4k. default: 4 MiB
    --max-reserved-size <max_reserved_size>    maximum size of usable linear memory region. must be multiple of 4k. default: 4 GiB
    --min-reserved-size <min_reserved_size>    minimum size of usable linear memory region. must be multiple of 4k. default: 4 MiB
    --opt-level <opt_level>                    optimization level (default: 'speed_and_size'). 0 is alias to 'none', 1 to 'speed', 2 to 'speed_and_size' [possible values: 0, 1, 2, none, speed, speed_and_size]
    -o, --output <output>                      output destination, defaults to a.out if unspecified
    --signature-pk <pk_path>                   Path to the public key to verify the source code signature
    --precious <precious>                      directory to keep intermediate build artifacts in
    --reserved-size <reserved_size>            exact size of usable linear memory region, overriding --{min,max}-reserved-size. must be multiple of 4k
    --signature-sk <sk_path>                   Path to the secret key to sign the object file. The file can be prefixed with "raw:" in order to store a raw, unencrypted secret key

ARGS:
    <input>    input file

External symbols

By default, compiled modules cannot call any external functions, not even WASI functions. Allowed external functions must be explicitly listed in a bindings JSON file, which has the following format:

{
    "wasi_unstable": {
        "symbol_name_1": "native_symbol_name_1",
        "symbol_name_n": "native_symbol_name_n"
    }
}

The example above allows the WebAssembly module to refer to an external symbol symbol_name_1, which maps to the native symbol native_symbol_name_1.

The --bindings command-line switch can be used more than once in order to split the definitions into multiple files.

When using WASI, the bindings.json file shipped with lucet-wasi can be used in order to import all the symbols available in the lucet-wasi runtime.
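The lookup rules above (only listed symbols are importable, and several --bindings files can be combined) can be modeled with plain maps. This is a sketch under assumptions: the JSON is taken as already parsed into nested HashMaps, single_module, merge and resolve are hypothetical helpers, and letting a later file override an earlier one for the same import is an assumption, not documented lucetc behavior.

```rust
use std::collections::HashMap;

/// One parsed bindings file: WebAssembly module name -> (import name -> native symbol).
type Bindings = HashMap<String, HashMap<String, String>>;

/// Build a single-module bindings map (hypothetical helper for the example).
fn single_module(module: &str, pairs: &[(&str, &str)]) -> Bindings {
    let symbols: HashMap<String, String> = pairs
        .iter()
        .map(|(import, native)| (import.to_string(), native.to_string()))
        .collect();
    let mut file: Bindings = HashMap::new();
    file.insert(module.to_string(), symbols);
    file
}

/// Combine several files, as when --bindings is given more than once.
/// Assumption: a later file wins if two files bind the same import.
fn merge(files: &[Bindings]) -> Bindings {
    let mut merged: Bindings = HashMap::new();
    for file in files {
        for (module, symbols) in file {
            let entry = merged.entry(module.clone()).or_default();
            for (import, native) in symbols {
                entry.insert(import.clone(), native.clone());
            }
        }
    }
    merged
}

/// Resolve an import; anything not explicitly listed is rejected.
fn resolve<'a>(bindings: &'a Bindings, module: &str, import: &str) -> Result<&'a str, String> {
    bindings
        .get(module)
        .and_then(|symbols| symbols.get(import))
        .map(|native| native.as_str())
        .ok_or_else(|| format!("import {}::{} is not listed in any bindings file", module, import))
}
```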

Memory limits

- --max-reserved-size <size> makes the compiler assume that the heap will never grow beyond <size> bytes. The compiler will generate code optimized for that size, inserting bounds checks with static values whenever necessary. As a side effect, the module will trap if the limit is ever reached, even if the runtime could allow the heap to grow even further.
- --min-reserved-size <size> sets the minimum size of the usable linear memory region the runtime should reserve.
- --reserved-size <size> is a shortcut to set both values simultaneously, and is the recommended way to configure how much memory the module can use. The default is only 4 MiB, so this is something you may want to increase.
- --guard-size <size> controls how much virtual memory with neither read nor write access is reserved after an instance's heap. The compiler can avoid some bounds checks when it is safe to do so according to this value.
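To make the size rules concrete, here is a sketch of parsing size arguments such as 64MiB and resolving the reserved sizes. parse_size and reserved_sizes are hypothetical helpers; the accepted suffixes (KiB, MiB, GiB) and the min <= max check are assumptions, while the 4k-multiple rule, the 4 MiB / 4 GiB defaults and the --reserved-size override come from the usage text.

```rust
/// Sizes passed to the memory options must be multiples of 4k.
const PAGE_SIZE: u64 = 4096;

/// Hypothetical parser for sizes like "64MiB", "4GiB" or a plain byte count.
fn parse_size(s: &str) -> Result<u64, String> {
    // Split "64MiB" into the number "64" and the unit "MiB".
    let split_at = s.find(|c: char| !c.is_ascii_digit()).unwrap_or(s.len());
    let (digits, unit) = s.split_at(split_at);
    let n: u64 = digits.parse().map_err(|_| format!("invalid size: {:?}", s))?;
    let multiplier: u64 = match unit {
        "" => 1,
        "KiB" => 1 << 10,
        "MiB" => 1 << 20,
        "GiB" => 1 << 30,
        _ => return Err(format!("unknown unit in size: {:?}", s)),
    };
    n.checked_mul(multiplier).ok_or_else(|| format!("size overflows u64: {:?}", s))
}

/// Resolve (min, max) reserved sizes; --reserved-size, when given, sets both.
fn reserved_sizes(reserved: Option<u64>, min: u64, max: u64) -> Result<(u64, u64), String> {
    let (min, max) = match reserved {
        Some(exact) => (exact, exact),
        None => (min, max),
    };
    for &size in [min, max].iter() {
        if size % PAGE_SIZE != 0 {
            return Err(format!("{} is not a multiple of 4k", size));
        }
    }
    // Assumption: a minimum above the maximum is rejected.
    if min > max {
        return Err(format!("minimum {} exceeds maximum {}", min, max));
    }
    Ok((min, max))
}
```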

Optimization levels

- --opt-level 0 makes compilation as fast as possible, but the resulting code itself may not be optimal.
- --opt-level 1 generates fast code, but does not run passes intended to reduce code size.
- --opt-level 2 generates the fastest and smallest code, but compilation is about twice as slow as with 0.
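The aliases between numeric and named optimization levels, as listed in the usage text, map naturally onto a small enum. This is an illustrative sketch; OptLevel is a hypothetical type, not lucetc's own.

```rust
use std::str::FromStr;

/// The optimization levels accepted by --opt-level (illustrative type).
#[derive(Debug, Clone, Copy, PartialEq)]
enum OptLevel {
    None,
    Speed,
    SpeedAndSize,
}

impl Default for OptLevel {
    /// The documented default is 'speed_and_size'.
    fn default() -> Self {
        OptLevel::SpeedAndSize
    }
}

impl FromStr for OptLevel {
    type Err = String;

    /// Accept both forms: 0 = none, 1 = speed, 2 = speed_and_size.
    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "0" | "none" => Ok(OptLevel::None),
            "1" | "speed" => Ok(OptLevel::Speed),
            "2" | "speed_and_size" => Ok(OptLevel::SpeedAndSize),
            other => Err(format!("invalid optimization level: {:?}", other)),
        }
    }
}
```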

lucet-runtime

lucet-runtime is the runtime for WebAssembly modules compiled through lucetc.

It is a Rust crate that provides the functionality to load modules from shared object files, instantiate them, and call exported WebAssembly functions. lucet-runtime manages the resources used by each WebAssembly instance (linear memory & globals), and the exception mechanisms that detect and recover from illegal operations.

The public API of the library is defined in lucet-runtime, but the bulk of the implementation is in the child crate lucet-runtime-internals. Proc macros are defined in lucet-runtime-macros, and test suites are defined in the child crate lucet-runtime-tests. Many of these tests invoke lucetc and the wasi-sdk tools.

lucet-runtime is usable as a Rust crate or as a C library. The C language interface is found at lucet-runtime/include/lucet.h.

KillSwitch

KillSwitch is a mechanism by which users of lucet-runtime can asynchronously request, very sternly, that a lucet_runtime::Instance be disabled from running.

If the instance is currently running, it will be stopped as soon as possible.

If the instance has not yet started running, it will immediately exit with an error when the Lucet embedder attempts to run it.

KillSwitch easily interoperates with Lucet's instance suspend/resume machinery: suspending an instance is, from the instance's point of view, just a (possibly very long) hostcall. Termination of a suspended instance behaves like termination in any other hostcall: witnessed when the instance is resumed and the "hostcall" exits.

In some circumstances, a KillSwitch may successfully fire to no actual effect at any point in the program - one such example is termination in a hostcall that eventually faults; since termination cannot preempt hostcalls, the termination may never be witnessed if the fault causes the host to never resume the Lucet instance.

In this chapter we will describe a typical usage of KillSwitch as a mechanism to enforce execution time limits. Then, we will discuss the implementation complexities that KillSwitch must address to be correct.

A KillSwitch is valid for termination only on the instance call following its creation, or until the instance is reset. When a call into a guest returns, the shared state through which a KillSwitch signals is replaced, and any later attempt to terminate will fail with an Err.
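One way to get this "valid for one call only" behavior is for the switch to hold a weak reference to the per-call state, so that replacing the state invalidates every outstanding switch. The sketch below models that idea with std types only; CallState, Switch, SwitchError and Inst are hypothetical stand-ins, not the lucet_runtime types.

```rust
use std::sync::{Arc, Mutex, Weak};

/// Simplified per-call state shared with kill switches (hypothetical stand-in).
struct CallState {
    terminate_requested: Mutex<bool>,
}

impl CallState {
    fn fresh() -> Arc<CallState> {
        Arc::new(CallState { terminate_requested: Mutex::new(false) })
    }
}

#[derive(Debug, PartialEq)]
enum SwitchError {
    /// The call this switch was created for is over.
    Invalid,
}

/// A switch can only reach the state of the call it was created for.
struct Switch {
    state: Weak<CallState>,
}

impl Switch {
    fn terminate(&self) -> Result<(), SwitchError> {
        // Upgrading fails once the instance has dropped this call's state.
        let state = self.state.upgrade().ok_or(SwitchError::Invalid)?;
        *state.terminate_requested.lock().unwrap() = true;
        Ok(())
    }
}

/// Hypothetical instance owning the only strong reference to its call state.
struct Inst {
    state: Arc<CallState>,
}

impl Inst {
    fn new() -> Self {
        Inst { state: CallState::fresh() }
    }

    fn kill_switch(&self) -> Switch {
        Switch { state: Arc::downgrade(&self.state) }
    }

    /// When a call returns, the shared state is replaced, invalidating old switches.
    fn finish_call(&mut self) {
        self.state = CallState::fresh();
    }
}
```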

Example: KillSwitch used as a timeout mechanism

This example is taken from lucet_runtime_tests::timeout::timeout_in_guest:

let module = mock_timeout_module();
let region = <TestRegion as RegionCreate>::create(1, &Limits::default())
    .expect("region can be created");
let mut inst = region
    .new_instance(module)
    .expect("instance can be created");
let kill_switch = inst.kill_switch();

// Spawn a thread to terminate the instance after waiting for 100ms.
let t = thread::Builder::new()
    .name("killswitch".to_owned())
    .spawn(move || {
        thread::sleep(Duration::from_millis(100));
        assert_eq!(kill_switch.terminate(), Ok(KillSuccess::Signalled));
    })
    .expect("can spawn a thread");

// Begin running the instance, which will be terminated remotely by the KillSwitch.
match inst.run("infinite_loop", &[]) {
    Err(Error::RuntimeTerminated(TerminationDetails::Remote)) => {
        // the result of a guest that was remotely terminated (a KillSwitch at work)
    }
    res => panic!("unexpected result: {:?}", res),
}

// wait for the KillSwitch-firing thread to complete
t.join().unwrap();

Implementation

As this section discusses implementation details of lucet_runtime, it will refer to structures and enums that are unexported. For most components of Lucet's KillSwitch functionality, definitions live in lucet-runtime-internals/src/instance/execution.rs.

KillState and Domain, around which most of the implementation is centered, are both defined here and are internal to Lucet. As a result, fully qualified paths such as instance::execution::Domain may be used below and not have corresponding entries visible in rustdoc - these items exist in the crate source though!

As much as is possible, KillSwitch tries to be self-contained; no members are public, and it tries to avoid leaking details of its state-keeping into public interfaces. Currently, lucet-runtime is heavily dependent on POSIX thread-directed signals to implement guest termination. For non-POSIX platforms alternate implementations may be plausible, but need careful consideration of the race conditions that can arise from other platform-specific functionality.

KillSwitch fundamentally relies on two pieces of state for safe operation, which are encapsulated in a KillState held by the Instance it terminates:

- execution_domain, a Domain that describes the kind of execution that is currently happening in the Instance. This is kept in a Mutex since in many cases it will need to be accessed either by a KillSwitch or KillState, both of which must block other users while they are considering the domain.
- terminable, an AtomicBool that indicates if the Instance may stop executing. terminable is in some ways a subset of the information expressed by Domain. It is true if and only if the instance is not obligated to a specific exit mechanism yet, which could be determined by examining the active Domain at the points where we check terminable instead. Even though this is duplicative, it is necessary for a correct implementation when POSIX signal handlers are involved, because it is extremely inadvisable to take locks in a signal handler. While a Mutex can be dangerous, we can share an AtomicBool in signal-safety-constrained code with impunity! See the section Timeout while handling a guest fault for more details on working under this constraint.
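The property that matters most about terminable is that exactly one party can claim it. A minimal model follows, assuming the claim is made with an atomic swap; the KillState and Domain below are simplified stand-ins, not the internal definitions.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Mutex;

#[derive(Debug, Clone, Copy, PartialEq)]
enum Domain {
    Pending,
    Guest,
    Hostcall,
    Terminated,
    Cancelled,
}

/// Simplified stand-in for the two pieces of state described above.
struct KillState {
    execution_domain: Mutex<Domain>,
    terminable: AtomicBool,
}

impl KillState {
    fn new() -> Self {
        KillState {
            execution_domain: Mutex::new(Domain::Pending),
            terminable: AtomicBool::new(true),
        }
    }

    /// Atomically read and clear `terminable`: exactly one caller ever
    /// gets `true`, and no lock is taken.
    fn try_acquire_terminable(&self) -> bool {
        self.terminable.swap(false, Ordering::SeqCst)
    }
}
```

Because the claim is a single lock-free atomic operation, the same check remains usable from code that must stay async-signal-safe.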

The astute observer may notice that Lucet contains both an instance Domain and an instance State. These discuss different aspects of the instance's lifecycle: Domain describes what the instance is doing right now, whereas State describes the state of the instance as visible outside lucet_runtime. This is fundamentally the reason why State does not and cannot describe a "making a hostcall" variant - this is not something lucet_runtime wants to expose, and at the moment we don't intend to make any commitments about being able to query instance-internal state like this.

Additionally, this is why Domain::Terminated only expresses that an instance has stopped running, not whether it exited successfully or unsuccessfully. From Domain's perspective, the instance has stopped running, and that's all there is to it. The Instance's State will describe how the instance stopped, and whether that was by a return or a fault.

Termination Mechanisms

At a high level, KillSwitch picks one of several mechanisms to terminate an instance. The mechanism selected depends on the instance's current Domain:

Domain::Guest is likely to be the most common termination form. lucet_runtime will send a thread-directed SIGALRM to the thread running the Lucet instance that currently is in guest code.

Domain::Hostcall results in the mechanism with the fewest guarantees: lucet_runtime can't know if it's safe to signal in arbitrary host-provided code[1]. Instead, we set the execution domain to Domain::Terminated and wait for the host code to complete, at which point lucet_runtime will exit the guest.

Domain::Pending is the easiest domain to stop execution in: we simply update the execution domain to Cancelled. In enter_guest_region, we see that the instance is no longer eligible to run and exit before handing control to the guest.

Other variants of Domain imply an instance state where there is no possible termination mechanism. Domain::Terminated indicates that the instance has already been terminated, and Domain::Cancelled indicates that the instance has already been preemptively stopped.
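The selection rules above amount to a match on the current domain. In this simplified model, select is a hypothetical function returning both the chosen mechanism and the domain to store back; the real implementation must also perform the signalling and waiting, which is omitted here.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
enum Domain {
    Pending,
    Guest,
    Hostcall,
    Terminated,
    Cancelled,
}

#[derive(Debug, PartialEq)]
enum Mechanism {
    /// Send a thread-directed SIGALRM to the thread running guest code.
    SignalGuest,
    /// Mark the instance terminated; it exits once the hostcall completes.
    AwaitHostcall,
    /// Mark the instance cancelled; it exits before running any guest code.
    Cancel,
    /// Already stopped: nothing left to do.
    NoOp,
}

/// Pick a termination mechanism and the domain to store back (simplified model).
fn select(domain: Domain) -> (Mechanism, Domain) {
    match domain {
        Domain::Guest => (Mechanism::SignalGuest, Domain::Guest),
        Domain::Hostcall => (Mechanism::AwaitHostcall, Domain::Terminated),
        Domain::Pending => (Mechanism::Cancel, Domain::Cancelled),
        Domain::Terminated | Domain::Cancelled => (Mechanism::NoOp, domain),
    }
}
```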

Guest Signalling

There are two other pieces of information attached to KillState that support the specific case where we need to send a SIGALRM: the thread ID we need to signal, and a Condvar we can wait on to know when the instance has been stopped.

The thread ID is necessary because we don't record where the instance is running anywhere else. We keep it here because, so far, KillState is the only place we actually need to care. Condvar allows lucet_runtime to avoid a spin loop while waiting for signal handling machinery to actually terminate an Instance in Domain::Guest.
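The Condvar pattern can be sketched with std types. This is a simplified model: StopSignal is a hypothetical type, and the notifying side is assumed to run in ordinary code, never inside a signal handler, since locks must not be taken there.

```rust
use std::sync::{Condvar, Mutex};

/// Hypothetical helper: lets one thread block until another reports that
/// the guest has stopped, instead of spinning.
struct StopSignal {
    stopped: Mutex<bool>,
    cvar: Condvar,
}

impl StopSignal {
    fn new() -> Self {
        StopSignal { stopped: Mutex::new(false), cvar: Condvar::new() }
    }

    /// Report that the guest has stopped. Takes a lock, so it is assumed
    /// to run in ordinary code rather than in a signal handler.
    fn mark_stopped(&self) {
        *self.stopped.lock().unwrap() = true;
        self.cvar.notify_all();
    }

    /// Block until `mark_stopped` has been called. `wait_while` re-checks the
    /// flag, so spurious wakeups and a notification sent before we start
    /// waiting are both handled.
    fn wait_until_stopped(&self) {
        let guard = self.stopped.lock().unwrap();
        let _stopped = self.cvar.wait_while(guard, |stopped| !*stopped).unwrap();
    }
}
```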

Lifecycle

Having described both KillState and the termination mechanisms it helps select, we can discuss the actual lifecycle of the KillState for a call into an Instance and see how these pieces begin to fit together.

Because KillState only describes one call into an instance, an Instance may have many KillStates over its lifetime. Even so, at any one time there is only one KillState, which describes the current, or imminent, call into the instance.

Execution of a Lucet Instance begins with a default KillState: in the Pending domain, terminable set to true, with no thread_id as it is not currently running. When a call is made to Instance::run, the Instance's bootstrap context is set up and KillState::schedule is called to set up last-minute state before the instance begins running.

lucet_runtime shortly thereafter will switch to the Instance and begin executing its bootstrapping code. In enter_guest_region the guest will lock and update the execution domain to Guest, or see the instance is Domain::Cancelled and exit. If the instance could run, we proceed into the AOT-compiled Wasm.

At some point, the instance will likely make a hostcall to embedder-provided or lucet_runtime-provided code; correctly-implemented hostcalls are wrapped in begin_hostcall and end_hostcall to take care of runtime bookkeeping, including updating execution_domain to Domain::Hostcall (begin_hostcall) and afterwards checking that the instance can return to guest code, setting execution_domain back to Domain::Guest (end_hostcall).
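The domain bookkeeping performed by enter_guest_region, begin_hostcall and end_hostcall can be modeled with a Mutex<Domain>. The method names mirror those in the text, but the bodies are a simplified sketch under assumptions (for example, unexpected domains simply panic), not Lucet's implementation; Exit is a hypothetical type.

```rust
use std::sync::Mutex;

#[derive(Debug, Clone, Copy, PartialEq)]
enum Domain {
    Pending,
    Guest,
    Hostcall,
    Terminated,
    Cancelled,
}

/// Why the instance stopped before reaching (or returning to) guest code.
#[derive(Debug, PartialEq)]
enum Exit {
    Cancelled,
    Terminated,
}

/// Simplified stand-in holding only the execution domain.
struct KillState {
    execution_domain: Mutex<Domain>,
}

impl KillState {
    /// Pending -> Guest, or refuse to run a cancelled instance.
    fn enter_guest_region(&self) -> Result<(), Exit> {
        let mut domain = self.execution_domain.lock().unwrap();
        let current = *domain;
        match current {
            Domain::Pending => {
                *domain = Domain::Guest;
                Ok(())
            }
            Domain::Cancelled => Err(Exit::Cancelled),
            other => panic!("unexpected domain on guest entry: {:?}", other),
        }
    }

    /// Guest -> Hostcall before running host code.
    fn begin_hostcall(&self) {
        let mut domain = self.execution_domain.lock().unwrap();
        assert_eq!(*domain, Domain::Guest, "hostcalls start from guest code");
        *domain = Domain::Hostcall;
    }

    /// Hostcall -> Guest, unless a KillSwitch marked the instance terminated meanwhile.
    fn end_hostcall(&self) -> Result<(), Exit> {
        let mut domain = self.execution_domain.lock().unwrap();
        let current = *domain;
        match current {
            Domain::Hostcall => {
                *domain = Domain::Guest;
                Ok(())
            }
            Domain::Terminated => Err(Exit::Terminated),
            other => panic!("unexpected domain after hostcall: {:?}", other),
        }
    }
}
```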

At some point the instance will hopefully exit, where in lucet_context_backstop we check that the instance may stop executing (KillState::terminable). If it can exit, it does so. Finally, back in lucet_runtime, we call KillState::deschedule to tear down the last of the run-specific state - the thread_id.
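A simplified model of the per-call KillState lifecycle: the default state, schedule and deschedule around a run, and the exit check at the backstop. Field names follow the text; try_claim_exit is a hypothetical method, and modeling the backstop check as an atomic swap of terminable is an assumption.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Mutex;
use std::thread::ThreadId;

#[derive(Debug, Clone, Copy, PartialEq)]
enum Domain {
    Pending,
    Guest,
    Hostcall,
    Terminated,
    Cancelled,
}

/// Simplified stand-in for the per-call KillState; a new one is used for each call.
struct KillState {
    execution_domain: Mutex<Domain>,
    terminable: AtomicBool,
    thread_id: Mutex<Option<ThreadId>>,
}

impl Default for KillState {
    /// Pending, terminable, and not bound to any thread yet.
    fn default() -> Self {
        KillState {
            execution_domain: Mutex::new(Domain::Pending),
            terminable: AtomicBool::new(true),
            thread_id: Mutex::new(None),
        }
    }
}

impl KillState {
    /// Last-minute setup before the instance runs: remember which thread runs it.
    fn schedule(&self, tid: ThreadId) {
        *self.thread_id.lock().unwrap() = Some(tid);
    }

    /// Hypothetical backstop check: claim `terminable` so no KillSwitch can start
    /// terminating an instance that is already exiting; `false` means one already has.
    fn try_claim_exit(&self) -> bool {
        self.terminable.swap(false, Ordering::SeqCst)
    }

    /// Tear down the last run-specific state once back in the host.
    fn deschedule(&self) {
        *self.thread_id.lock().unwrap() = None;
    }
}
```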

Implementation Complexities (When You Have A Scheduler Full Of Demons)

Many devils live in the details. The rest of this chapter will discuss the numerous edge cases and implementation concerns that Lucet's asynchronous signal implementation must consider, and arguments for its correctness in the face of these.

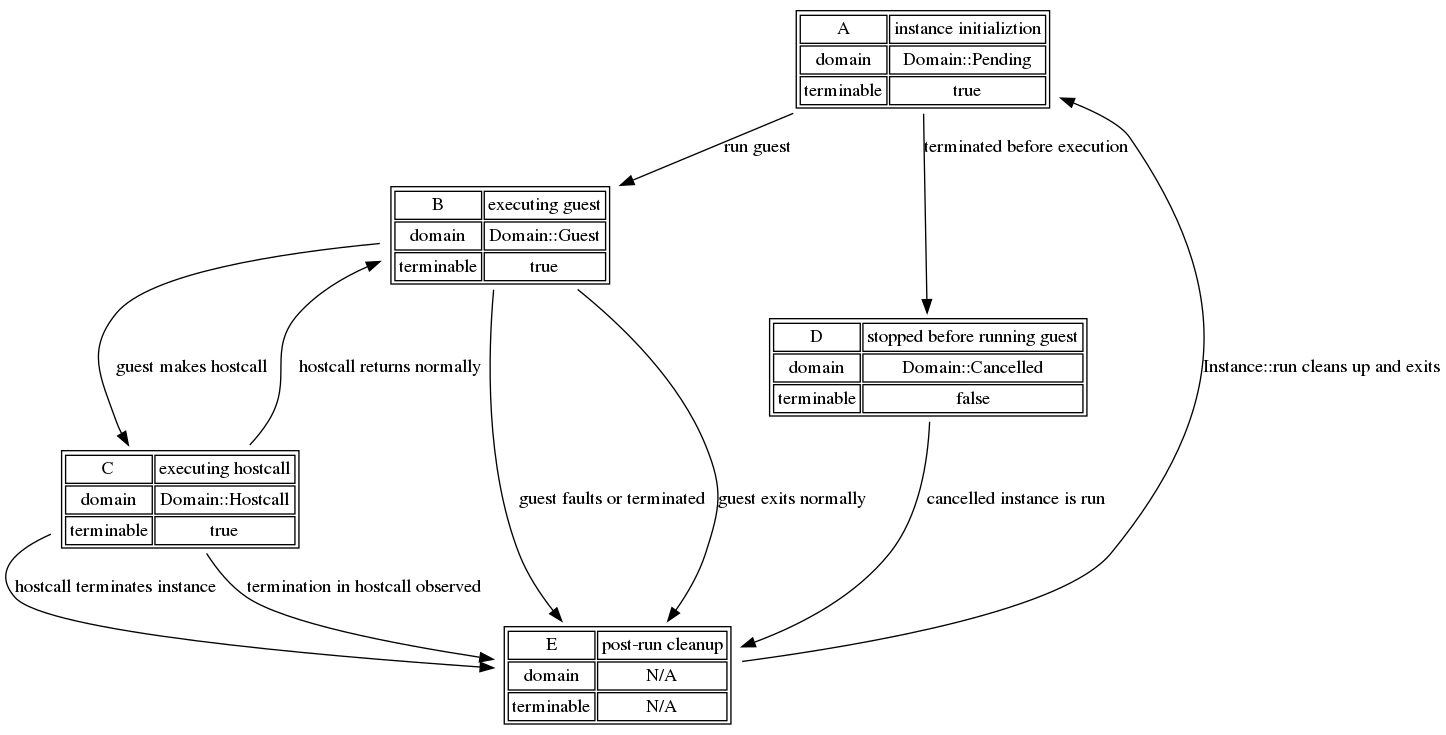

First, a flow chart of the various states and their transitions:

This graph describes the various states that reflect values of execution_domain and terminable for those states, with edges describing transitions between domains including termination in non-race scenarios. The rest of this section discusses the correctness of termination at boundaries where these state transitions occur.

For reference later, the possible state transitions are:

- A -> B (instance runs guest code)
- A -> D (KillSwitch fires before instance runs)
- B -> C (guest makes a hostcall)
- B -> E (normal guest exit)
- B -> E (from a guest fault/termination)
- C -> B (hostcall returns to guest)
- C -> E (hostcall terminates instance)
- C -> E (hostcall observes termination)
- (not an internal state, but we will also discuss termination during a hostcall fault)
- D -> E (cancelled guest is run)
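The transition list can be encoded directly. The mapping of letters to domains (A = Pending, B = Guest, C = Hostcall, D = Cancelled, E = Terminated) is an assumption inferred from the transition descriptions, since the chart itself is not reproduced here; edges that differ only in cause collapse into one.

```rust
/// States of the flow chart (letter-to-domain mapping is an assumption).
#[derive(Debug, Clone, Copy, PartialEq)]
enum State {
    A, // Pending
    B, // Guest
    C, // Hostcall
    D, // Cancelled
    E, // Terminated
}

/// True if `from -> to` is one of the listed transitions.
fn is_valid_transition(from: State, to: State) -> bool {
    use State::*;
    matches!(
        (from, to),
        (A, B) | (A, D) | (B, C) | (B, E) | (C, B) | (C, E) | (D, E)
    )
}
```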

These will be covered in rough order of complexity, starting with the simplest cases and ending with the race which has shown itself to have the most corners. Races involving Domain::Guest tend to be trickiest, and consequently are further down.

A -> D - Termination before instance runs

This is a timeout while another KillSwitch has already fired, timing out a guest before execution. Because another KillSwitch must have fired for there to be a race, one of the two will acquire terminable and actually update execution_domain, while the other simply exits.

D -> E - Termination when cancelled guest is run

Terminating a previously cancelled guest will have no effect - termination must have occurred already, so the KillSwitch that fired will not acquire terminable, and will return without ceremony.

C -> B - Termination when hostcall returns to guest

The case of a KillSwitch firing while exiting from a hostcall is very similar to termination while entering a hostcall.

The KillSwitch might observe a guest in Domain::Guest, prohibit a state change, and signal the guest. Alternatively, the KillSwitch can observe the guest in Domain::Hostcall and update the guest to Domain::Terminated. In the latter case, the guest will be free to run when the KillSwitch returns, at which point it will have the same behavior as termination in any hostcall.

C -> E - Termination during hostcall terminating instance

The KillSwitch that fires acquires terminable and then attempts to acquire a lock on execution_domain. The KillSwitch will see Domain::Hostcall, and will update to Domain::Terminated. The shared KillState will not be used by lucet_runtime again in the future, because after returning to the host it will be replaced by a new KillState.

C -> E - Termination repeatedly during hostcall

Termination while a hostcall is already observing an earlier termination will have no effect. The KillSwitch that fired last will not acquire terminable, and will return without ceremony.

B -> C - Termination when guest makes a hostcall

If a KillSwitch fires during a transition from guest (B) to hostcall (C) code, there are two circumstances, contingent on whether the instance has switched to Domain::Hostcall.

Before switching to Domain::Hostcall

The KillSwitch that fires locks execution_domain and sees that the execution domain is Domain::Guest. It then uses the typical guest termination machinery and signals the guest. An important correctness subtlety here is that KillSwitch holds the execution_domain lock until the guest is terminated, so the guest cannot simultaneously proceed into hostcall code and receive a stray SIGALRM.

After switching to Domain::Hostcall

The KillSwitch that fires acquires terminable and then attempts to acquire a lock on execution_domain. Because the instance is switching to or has switched to Domain::Hostcall, the KillSwitch will select the termination style for hostcalls. It will update the execution domain to Domain::Terminated and the instance will return when the hostcall exits and the Terminated domain is observed.

A -> B - Termination while entering guest code

If a KillSwitch fires between instance initialization (A) and the start of guest execution (B), there are two circumstances to consider: does the termination occur before or after the Lucet instance has switched to Domain::Guest?

Before switching to Domain::Guest

The KillSwitch that fires acquires terminable and then locks execution_domain to determine the appropriate termination mechanism. This is before the instance has locked it in enter_guest_region, so it will acquire the lock, with a state of Domain::Pending. Seeing a pending instance, the KillSwitch will update it to Domain::Cancelled and release the lock, at which point the instance will acquire the lock, observe the instance is Cancelled, and return to the host without executing any guest code.

After switching to Domain::Guest

The KillSwitch that fires acquires terminable and then attempts to acquire a lock on execution_domain to determine the appropriate termination mechanism. Because the instance has already locked execution_domain to update it to Domain::Guest, this blocks until the instance releases the lock (and guest code is running). At this point, the instance is running guest code and it is safe for the KillSwitch to operate as if it were terminating any other guest code, using the same machinery as an instance in state B (a SIGALRM).

B -> E - Termination during normal guest exit

The KillSwitch that fires attempts to acquire terminable, but is in a race with the teardown in lucet_context_backstop. Both functions attempt to swap false into terminable, but only one will see true out of it. This acts as an indicator of which function may continue, while the other may have to take special care not to leave the instance in a state that would be dangerous to signal.
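The swap handshake can be modeled with a plain AtomicBool. This is a minimal sketch, assuming terminable behaves like an atomic boolean that starts out true; try_acquire and race_once are hypothetical names, and the two threads merely stand in for the KillSwitch and the teardown in lucet_context_backstop.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::thread;

/// Claim the right to act by swapping `false` in. Exactly one caller ever
/// observes `true`; every later caller observes `false`.
fn try_acquire(terminable: &AtomicBool) -> bool {
    terminable.swap(false, Ordering::SeqCst)
}

/// Race two claimants against the same flag and count how many won.
fn race_once() -> usize {
    let terminable = Arc::new(AtomicBool::new(true));
    let handles: Vec<_> = (0..2)
        .map(|_| {
            let t = Arc::clone(&terminable);
            thread::spawn(move || try_acquire(&t))
        })
        .collect();
    handles
        .into_iter()
        .map(|h| h.join().unwrap())
        .filter(|&won| won)
        .count()
}

fn main() {
    // Regardless of scheduling, each race has exactly one winner.
    for _ in 0..1000 {
        assert_eq!(race_once(), 1);
    }
    println!("exactly one winner in every race");
}
```

Because swap is a single atomic read-modify-write, there is no window in which both sides read true, which is what lets the losing side know it must back off.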

Guest acquires terminable

The guest exits in the next handful of instructions. The KillSwitch that failed to acquire the true in terminable exits with an indication that it could not terminate the guest. In this circumstance, we are certain that there is a non-conditional and short path out of the guest comprising a return from exit_guest_region and a context swap back to the host code. Timeouts "failing" due to this are only failing because the guest is about to exit, and a signal would have no interesting additional benefit.

KillSwitch acquires terminable

In a more unfortunate circumstance, the KillSwitch is what observed the true out of terminable. In this case, the guest observed false and must not proceed, so that whenever an imminent SIGALRM from the corresponding KillSwitch arrives, it will be in a guaranteed-to-be-safe spin loop, or on its way there with only signal-safe state.

The KillSwitch itself will signal the guest as with any other Domain::Guest interruption.

B -> E - Termination during guest fault, or terminated twice

In this sub-section we assume that the Lucet signal handler is being used, and will discuss the properties KillSwitch requires from any signal handler for correctness.

The KillSwitch that fires attempts to acquire terminable. Because a guest fault or termination has occurred, the guest is actually in lucet_runtime_internals::instance::signals::handle_signal. If termination occurs while the guest is already responding to a previous KillSwitch's termination, the second KillSwitch will see terminable of false and quickly exit. Otherwise, terminable is true and we have to handle...

Terminated while handling a guest fault

In the case that a KillSwitch fires during a guest fault, the KillSwitch may acquire terminable. POSIX signal handlers are highly constrained (see man 7 signal-safety for details). The functional constraint imposed on signal handlers used with Lucet is that they may not lock on KillState's execution_domain.

As a consequence, a KillSwitch may fire during the handling of a guest fault. The sigaction used to install the handler must mask SIGALRM so that a signal fired before the handler exits does not preempt the handler. If the signal behavior is to continue without effect, leave termination in place and continue to the guest. A pending SIGALRM will be raised at this point and the instance will exit. Otherwise, the signal handler has determined it must return to the host, and must be sensitive to a possible in-flight KillSwitch...

Instance-stopping guest fault with concurrent KillSwitch

In this case we must consider three constraints:

- A KillSwitch may fire and deliver a SIGALRM at any point
- A SIGALRM may already have been fired, pending on the handler returning
- The signal handler must return to host code

First, we handle the risk of a KillSwitch firing: disable termination. If we acquire terminable, we know this is to the exclusion of any KillSwitch, and are safe to return. Otherwise, some KillSwitch has terminated, or is in the process of terminating, this guest's execution. This means a SIGALRM may be pending or imminent!

A slightly simpler model is to consider that a SIGALRM may arrive in the future. This way, for correctness we only have to make sure we handle the signal we can be sent! We know that we must return to the host, and the guest fault that occurred is certainly more interesting than the guest termination, so we would like to preserve that information. There is no information or effect we want from the signal, so silence the alarm on KillState. This way, if we receive the possible SIGALRM, we know to ignore it.
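The interplay between disabling termination and silencing the alarm can be modeled with two flags. This sketch assumes a KillState reduced to two atomics; KillStateModel, alarm_active, and the method names are hypothetical and are not lucet-runtime-internals' actual KillState API. It models only the decision logic, not real signal delivery.

```rust
use std::sync::atomic::{AtomicBool, Ordering};

/// Hypothetical, simplified stand-in for KillState.
struct KillStateModel {
    terminable: AtomicBool,
    alarm_active: AtomicBool,
}

impl KillStateModel {
    fn new() -> Self {
        KillStateModel {
            terminable: AtomicBool::new(true),
            alarm_active: AtomicBool::new(true),
        }
    }

    /// A KillSwitch firing: it may only proceed if it claims `terminable`.
    /// Returns true if it would go on to send SIGALRM.
    fn kill_switch_fires(&self) -> bool {
        self.terminable.swap(false, Ordering::SeqCst)
    }

    /// Fault handler that must return to the host. Disabling termination either
    /// excludes every KillSwitch, or reveals that one already won; in that case a
    /// SIGALRM may still arrive, so the alarm is silenced.
    fn fault_returns_to_host(&self) {
        if !self.terminable.swap(false, Ordering::SeqCst) {
            self.alarm_active.store(false, Ordering::SeqCst);
        }
    }

    /// What a SIGALRM handler would check on delivery: act only if the alarm is live.
    fn on_sigalrm_should_terminate(&self) -> bool {
        self.alarm_active.load(Ordering::SeqCst)
    }
}

fn main() {
    // The fault handler loses the race: a KillSwitch claimed `terminable` first.
    let ks = KillStateModel::new();
    let signal_sent = ks.kill_switch_fires();
    ks.fault_returns_to_host();
    // The late SIGALRM is ignored, so the fault, not the timeout, is reported.
    println!(
        "signal sent: {}, acted on: {}",
        signal_sent,
        ks.on_sigalrm_should_terminate()
    );
}
```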

An important question to ask is "How do we know the possible SIGALRM must be ignored? Could it not be for another instance later on that thread?" The answer is, in short, "We ensure it cannot!"

The SIGALRM is thread-directed, so to be an alarm for some other reason, another instance would have to run and be terminated. To prevent this, we must prevent another instance from running. Additionally, if a SIGALRM is in flight, we need to stop and wait anyway. Since KillSwitch maintains a lock on execution_domain as long as it's attempting to terminate a guest, we can achieve both of these goals by taking, and immediately dropping, a lock on execution_domain before descheduling an instance.
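The take-and-drop idiom works because acquiring a mutex cannot succeed while another thread holds it. Below is a minimal sketch with std::sync::Mutex, assuming execution_domain behaves like a Mutex<Domain> (a two-variant Domain here for brevity); wait_for_in_flight_kill_switch and deschedule_during_kill_switch are hypothetical names, and the thread and channel exist only to stage the race deterministically.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Domain {
    Guest,
    Terminated,
}

/// Hypothetical deschedule step: take the execution_domain lock and drop it
/// immediately. This blocks until any KillSwitch holding the lock finishes.
fn wait_for_in_flight_kill_switch(execution_domain: &Mutex<Domain>) -> Domain {
    let guard = execution_domain.lock().unwrap();
    let observed = *guard;
    drop(guard); // released right away; the lock was only needed to serialize
    observed
}

/// Stage the case where a KillSwitch already holds the lock when the
/// instance tries to deschedule.
fn deschedule_during_kill_switch() -> Domain {
    let execution_domain = Arc::new(Mutex::new(Domain::Guest));
    let (locked_tx, locked_rx) = mpsc::channel();

    let ks_domain = Arc::clone(&execution_domain);
    let kill_switch = thread::spawn(move || {
        let mut guard = ks_domain.lock().unwrap();
        locked_tx.send(()).unwrap(); // the KillSwitch now holds the lock
        thread::sleep(Duration::from_millis(50)); // stand-in for signalling the guest
        *guard = Domain::Terminated; // guard drops when the closure returns
    });

    locked_rx.recv().unwrap(); // wait until the KillSwitch is in flight
    let seen = wait_for_in_flight_kill_switch(&execution_domain);
    kill_switch.join().unwrap();
    seen
}

fn main() {
    // The instance cannot pass the lock until the KillSwitch has finished.
    println!("deschedule observed {:?}", deschedule_during_kill_switch());
}
```

The sleep only makes the blocking visible; correctness does not depend on it, because the lock attempt happens strictly after the KillSwitch has announced that it holds the lock.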

Even taking this lock is interesting:

- This lock could be taken while racing a KillSwitch, after it has observed it may fire but before advancing to take this lock.
- This lock could be taken while racing a KillSwitch, after it has taken this lock.
- This lock could be taken without racing a KillSwitch.

In the first case, we've won a race on execution_domain, but there might be a KillSwitch we're blocking with this. Disarm the KillSwitch by updating the domain to Terminated, which reflects the fact that this instance has exited.

In the second case, descheduling blocks until the KillSwitch has completed termination. The domain will be Terminated, and updating to Terminated has no effect. We simply use this lock to prevent continuing into the host until an in-flight KillSwitch completes.

In the third case, we're not racing a KillSwitch, and any method of exiting the guest will have disabled termination. No KillSwitch will observe a changed execution_domain, so it's not incorrect to update it to Terminated.

Taken together, this ensures that descheduling an instance either serializes with an in-flight KillSwitch or prevents one from taking effect in a possibly-incorrect way, so we know this race is handled correctly.

Terminated in hostcall fault

As promised, a note about what happens when a timeout occurs directly when a hostcall faults! The instance's execution_domain must be Domain::Hostcall, as it's a hostcall that faulted. The KillSwitch may or may not fire before the signal handler disables termination. Even if it does fire, it will lock the shared execution_domain and see Domain::Hostcall, where the domain will be updated to Domain::Terminated. Since the hostcall will not resume, end_hostcall will never see that the instance should stop, and the termination has no further effect; regardless of the KillSwitch's effect, the instance will exit through the signal handler with a Faulted state. Additionally, because faulted instances cannot be resumed, end_hostcall will never witness the timeout.

[1]: For example, the code we would like to interrupt may hold locks, which we can't necessarily guarantee will be released. In a non-locking example, the host code could be resizing a Vec shared outside that function, where interrupting the resize could yield various forms of broken behavior.

lucet-wasi

lucet-wasi is a crate providing runtime support for the WebAssembly System Interface (WASI). It can be used as a library to support WASI in another application, or as an executable, lucet-wasi, to execute WASI programs compiled through lucetc.

Example WASI programs are in the examples directory.

Example

lucet-wasi example.so --dir .:. --max-heap-size 2GiB -- example_arg

Usage

lucet-wasi [OPTIONS] <lucet_module> [--] [guest_args]...

FLAGS:
    -h, --help       Prints help information
    -V, --version    Prints version information

OPTIONS:
        --entrypoint <entrypoint>    Entrypoint to run within the WASI module [default: _start]
        --heap-address-space <heap_address_space_size>
            Maximum heap address space size (must be a multiple of 4 KiB, and >= `max-heap-size`) [default: 8 GiB]
        --max-heap-size <heap_memory_size>
            Maximum heap size (must be a multiple of 4 KiB) [default: 4 GiB]
        --dir <preopen_dirs>...      A directory to provide to the WASI guest
        --stack-size <stack_size>
            Maximum stack size (must be a multiple of 4 KiB) [default: 8 MiB]

ARGS:
    <lucet_module>     Path to the `lucetc`-compiled WASI module
    <guest_args>...    Arguments to the WASI `main` function
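The size constraints stated in the help text can be restated as a small check. This sketch works on byte counts and assumes unit suffixes such as 2GiB have already been parsed; check_sizes is a hypothetical helper, not lucet-wasi's actual argument validation.

```rust
const KIB: u64 = 1024;
const MIB: u64 = 1024 * KIB;
const GIB: u64 = 1024 * MIB;

/// Hypothetical helper restating the documented constraints (sizes in bytes).
fn check_sizes(heap_address_space: u64, max_heap_size: u64, stack_size: u64) -> Result<(), String> {
    let sizes = [
        ("--heap-address-space", heap_address_space),
        ("--max-heap-size", max_heap_size),
        ("--stack-size", stack_size),
    ];
    // Every size must be a whole number of 4 KiB pages.
    for (flag, value) in sizes {
        if value % (4 * KIB) != 0 {
            return Err(format!("{} must be a multiple of 4 KiB", flag));
        }
    }
    // The heap can never grow beyond the address space reserved for it.
    if heap_address_space < max_heap_size {
        return Err("--heap-address-space must be >= --max-heap-size".to_string());
    }
    Ok(())
}

fn main() {
    // The documented defaults: 8 GiB address space, 4 GiB heap, 8 MiB stack.
    println!("{:?}", check_sizes(8 * GIB, 4 * GIB, 8 * MIB));
    // A heap larger than its address space is rejected.
    println!("{:?}", check_sizes(2 * GIB, 4 * GIB, 8 * MIB));
}
```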

Preopened files and directories

By default, WASI doesn't allow any access to the filesystem. Files and directories must be explicitly allowed by the host.

Instead of directly accessing the filesystem using paths, an instance will use inherited descriptors from pre-opened files.

This means that even the current directory cannot be accessed by a WebAssembly instance, unless this has been allowed with:

--dir .:.

This maps the current directory . as seen by the WebAssembly module to . as seen on the host.

Multiple --dir <wasm path>:<host path> arguments can be used in order to allow the instance to access more paths.
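Each --dir value pairs a path as seen by the guest with a path on the host. A minimal sketch of splitting such a value, assuming it always has the <wasm path>:<host path> form shown above; parse_dir_mapping is hypothetical, and lucet-wasi's real handling (for example of paths that themselves contain a colon) may differ.

```rust
/// Hypothetical parser for one `--dir <wasm path>:<host path>` value.
/// Splits on the first ':' and rejects empty halves.
fn parse_dir_mapping(arg: &str) -> Option<(&str, &str)> {
    let (guest, host) = arg.split_once(':')?;
    if guest.is_empty() || host.is_empty() {
        return None;
    }
    Some((guest, host))
}

fn main() {
    for arg in [".:.", "/data:/srv/app/data", "missing-colon"] {
        println!("{:?} -> {:?}", arg, parse_dir_mapping(arg));
    }
}
```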

Along with a preopened file/directory, WASI stores a set of capabilities. Lucet currently sets all the capabilities. In particular, once a directory has been preopened, its content as well as files from any of its subdirectories can be accessed as well.

Maximum heap size

--heap-address-space controls the maximum allowed heap size.

Usually, this should match the --reserved-size value given to lucetc.

Supported syscalls

We support the entire WASI API, with the exception of socket-related syscalls. These will be added when network access is standardized.

Thread safety

Lucet guests are currently single-threaded only. The WASI embedding assumes this, and so the syscall implementations are not thread-safe. This is not a fundamental limitation, should Lucet support multi-threaded guests in the future.

lucet-objdump

lucet-objdump is a Rust executable for inspecting the contents of a shared object generated by lucetc.

Usage

lucet-objdump <lucetc-compiled-shared-object>

lucet-objdump prints details about a shared object produced by lucetc:

- Required symbols

- Exported functions and symbols

- Imported functions and symbols

- Heap specification

- Globals specification

- Data segments

- Sparse page data

- Trap manifest

This can be useful for debugging purposes.

lucet-spectest

lucet-spectest is a Rust crate that uses lucetc and lucet-runtime, as well as the (external) wabt crate, to run the official WebAssembly spec test suite, which is provided as a submodule in this directory.

Lucet is not yet fully spec-compliant; however, reaching spec compliance is part of our project roadmap.

lucet-wasi-sdk

wasi-sdk is a Cranelift project that packages a build of the Clang toolchain, the WASI reference sysroot, and a libc based on WASI syscalls.

lucet-wasi-sdk is a Rust crate that provides wrappers around these tools for building C programs into Lucet modules. We use this crate to build test cases in lucet-runtime-tests and lucet-wasi.

lucet-module

lucet-module is a crate with data structure definitions and serialization functions that we emit into shared objects with lucetc, and read with lucet-runtime.

Tests and benchmarks

Most of the crates in this repository have some form of unit tests. In addition, lucet-runtime/lucet-runtime-tests defines a number of integration tests for the runtime, and lucet-wasi has a number of integration tests using WASI C programs.

We also created the Sight Glass benchmarking tool to measure the runtime of C code compiled through a standard native toolchain against the Lucet toolchain. It is provided as a submodule at /sightglass.

Sightglass ships with a set of microbenchmarks called shootout. The scripts to build the shootout tests with native and various versions of the Lucet toolchain are in /benchmarks/shootout.

Furthermore, there is a suite of benchmarks of various Lucet runtime functions, such as instance creation and teardown, in /benchmarks/lucet-benchmarks.

Adding new tests for crates other than lucet-runtime or lucet-runtime-internals

Most of the crates in this repository are tested in the usual way, with a mix of unit tests defined alongside the modules they test, and integration tests defined in separate test targets.

Note that lucetc and lucet-wasi-sdk provide library interfaces for the Wasm compiler and the C-to-Wasm cross compiler, respectively. You should prefer using these interfaces in a test to using the command-line tools directly with std::process::Command, a Makefile, or a shell script.

Adding new tests for lucet-runtime or lucet-runtime-internals

While these crates have a similar mix of unit and integration tests, there are some additional complications to make sure the public interface is well-tested, and to allow reuse of tests across backends.

Public interface tests

The tests defined in lucet-runtime and lucet-runtime-tests are meant to exclusively test the public interface exported from lucet-runtime. This is to ensure that the public interface is sufficiently expressive to use all of the features we want to expose to users, and to catch linking problems as early as possible.

While some tests in these crates use APIs exported only from lucet-runtime-internals, this is only for test scaffolding or inspection of results. The parts of the test that are "what the user might do" should only be defined in terms of lucet-runtime APIs.

Test reuse, regions, and macros

Many of the tests in the runtime require the use of a lucet_runtime::Region in order to create Lucet instances. To enable reuse of these tests across multiple Region implementations, many tests are defined in macros. For example, the unit tests defined in /lucet-runtime/lucet-runtime-internals/src/alloc/tests.rs are parameterized by a TestRegion argument, which is then applied using crate::region::mmap::MmapRegion. Likewise, many of the integration tests in /lucet-runtime/lucet-runtime-tests are defined using macros that take a TestRegion.

Most tests that use a Region should be defined within a macro like this. The exception is for tests that are specific to a particular region implementation, likely using an API that is not part of the Region trait. These tests should be defined alongside the implementation of the region (for unit tests) or directly in a lucet-runtime test target (for integration tests).
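The macro pattern described above can be sketched in miniature. Everything in this block is hypothetical and far simpler than the real lucet_runtime::Region trait and MmapRegion: it only shows how one macro body yields tests for each region type passed in. In the real crates the generated functions would carry #[test] attributes; here they are plain functions so main can call them.

```rust
/// Hypothetical, heavily simplified stand-in for lucet_runtime::Region.
trait Region {
    fn create(slots: usize) -> Self;
    fn capacity(&self) -> usize;
}

/// Hypothetical stand-in for crate::region::mmap::MmapRegion.
struct FakeMmapRegion {
    slots: usize,
}

impl Region for FakeMmapRegion {
    fn create(slots: usize) -> Self {
        FakeMmapRegion { slots }
    }
    fn capacity(&self) -> usize {
        self.slots
    }
}

/// Write region-generic tests once; instantiate them once per region type.
macro_rules! region_tests {
    ($mod_name:ident, $TestRegion:ty) => {
        mod $mod_name {
            use super::*;

            // In a real test suite this would be a #[test] fn.
            pub fn capacity_matches_request() -> bool {
                let region = <$TestRegion as Region>::create(4);
                region.capacity() == 4
            }
        }
    };
}

// One invocation per Region implementation under test.
region_tests!(mmap_tests, FakeMmapRegion);

fn main() {
    println!("mmap: {}", mmap_tests::capacity_matches_request());
}
```

Adding a second region implementation would then take one more macro invocation rather than a copy of every test.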

Sight Glass

Sight Glass is a benchmark suite and tool to compare different implementations of the same primitives.

Usage

Sight Glass loads multiple shared libraries implementing the same test suite, runs all tests from all suites, and produces reports to evaluate how implementations compare to each other.

Functions from each library are evaluated as follows:

tests_config.global_setup(&global_ctx);

test1_setup(global_ctx, &test1_ctx);
test1_body(test1_ctx);
test1_teardown(test1_ctx);

test2_setup(global_ctx, &test2_ctx);
test2_body(test2_ctx);
test2_teardown(test2_ctx);

// ...

testN_setup(global_ctx, &testN_ctx);
testN_body(testN_ctx);
testN_teardown(testN_ctx);

tests_config.global_teardown(global_ctx);

Each shared library must export a tests_config symbol:

#include <stdint.h>

typedef struct TestsConfig {
    void (*global_setup)(void **global_ctx_p);
    void (*global_teardown)(void *global_ctx);
    uint64_t version;
} TestsConfig;

TestsConfig tests_config;

global_setup and global_teardown are optional, and can be set to NULL if not required.

A test must at least export a function named <testname>_body:

void testname_body(void *ctx);

This function contains the actual code to be benchmarked.

By default, ctx will be set to the global_ctx. However, optional setup and teardown functions can also be provided for individual tests:

void testname_setup(void *global_ctx, void **ctx_p);
void testname_teardown(void *ctx);

See example/example.c for an example test suite.

Sightglass extracts all symbols matching the above convention to define and run the test suite.

Running multiple functions for a single test

A single test can evaluate multiple body functions sharing the same context.

These functions have to be named <testname>_body_<bodyname>.

<bodyname> can be anything; a numeric ID or a short description of the purpose of the function.

void testname_body_2(void *ctx);
void testname_body_randomized(void *ctx);

These functions are guaranteed to be evaluated according to their names sorted in lexical order.
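Selecting and ordering a test's body functions can be sketched over a plain list of symbol names. This assumes the exported symbol names have already been read from the shared library (how that is done is outside this sketch); body_functions is a hypothetical helper. Lexical order here is byte-wise, so a body named 10 sorts before one named 2.

```rust
/// Hypothetical helper: pick out the `<test>_body_<bodyname>` functions of
/// one test from a list of exported symbol names, in lexical order.
fn body_functions<'a>(symbols: &[&'a str], test: &str) -> Vec<&'a str> {
    let prefix = format!("{}_body_", test);
    let mut bodies: Vec<&'a str> = symbols
        .iter()
        .copied()
        .filter(|s| s.starts_with(&prefix))
        .collect();
    bodies.sort(); // byte-wise lexical order: "_10" sorts before "_2"
    bodies
}

fn main() {
    let symbols = [
        "testname_setup",
        "testname_body_randomized",
        "testname_body_2",
        "testname_body_10",
        "other_body_1",
    ];
    for body in body_functions(&symbols, "testname") {
        println!("{}", body);
    }
}
```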

Configuration

The global configuration is loaded from the sightglass.toml file by default. This can be changed using the -c command-line flag.

The configuration lists implementations to be benchmarked:

test_suites = [
    { name = "test1", library_path = "implementation1.so" },
    { name = "test2", library_path = "implementation2.so" }
]

Individual test suites can also specify a guard command; the suite is skipped if that command returns a non-zero exit code:

test_suites = [
    { name = "test1", library_path = "implementation1.so" },
    { name = "test2", library_path = "implementation2.so", guard = ["/opt/sg/guard-scripts/check", "arg1", "arg2"] }
]

Additional properties that the file can include:

- single_core = <bool>: set to true in order to run the tests on a single CPU core, to get more accurate results. This only works on Linux.
- output = [ { format = "Text|CSV|JSON" [, file = <file>] [, breakdown = <bool>] } ... ]: how to store or display the results.

By default, the Text and CSV output do not include a breakdown of the time spent in individual functions for tests made of multiple functions. This can be changed by setting the optional breakdown property to true. The JSON output always includes this information.

Versioning and releasing to crates.io

This document describes how to appropriately decide the version of a new release, and the steps to actually get it published on crates.io.

Versioning

We release new versions of the Lucet crates all at once, keeping the versions in sync across crates. As a result, our adherence to semver is project-wide, rather than per-crate.

The versioning reflects the semantics of the public interface to Lucet. That is, any breaking change to the following crates requires a semver major version bump:

- lucetc
- lucet-objdump
- lucet-runtime
- lucet-validate
- lucet-wasi
- lucet-wasi-sdk

For the other Lucet crates that are primarily meant for internal consumption, a breaking change does not inherently require a semver major version bump unless either:

- The changed interfaces are reexported as part of the public interface via the above crates, or

- The binary format of a compiled Lucet module is changed.

For example, a change to the type of Instance::run() would require a major version bump, but a change to the type of InstanceInternal::alloc() would not.

Likewise, a change to a field on ModuleData would require a major version bump, as it would change the serialized representation in a compiled Lucet module.
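The versioning rules above reduce to a short predicate. This is a sketch, not project tooling: BreakingChange and requires_major_bump are hypothetical, and the examples model Instance as living in lucet-runtime-internals and being reexported through lucet-runtime, which is an assumption made for illustration.

```rust
/// Crates whose breaking changes always require a semver major bump.
const PUBLIC_CRATES: [&str; 6] = [
    "lucetc",
    "lucet-objdump",
    "lucet-runtime",
    "lucet-validate",
    "lucet-wasi",
    "lucet-wasi-sdk",
];

/// Hypothetical summary of a breaking change, used only for this sketch.
struct BreakingChange<'a> {
    crate_name: &'a str,
    reexported_publicly: bool,
    changes_module_format: bool,
}

fn requires_major_bump(change: &BreakingChange) -> bool {
    PUBLIC_CRATES.iter().any(|&c| c == change.crate_name)
        || change.reexported_publicly
        || change.changes_module_format
}

fn main() {
    // Instance::run(): internal crate, but reexported through lucet-runtime.
    let run = BreakingChange { crate_name: "lucet-runtime-internals", reexported_publicly: true, changes_module_format: false };
    // InstanceInternal::alloc(): internal and not reexported.
    let alloc = BreakingChange { crate_name: "lucet-runtime-internals", reexported_publicly: false, changes_module_format: false };
    // A ModuleData field: changes the serialized module format.
    let module_data = BreakingChange { crate_name: "lucet-module", reexported_publicly: false, changes_module_format: true };
    println!(
        "{} {} {}",
        requires_major_bump(&run),
        requires_major_bump(&alloc),
        requires_major_bump(&module_data)
    );
}
```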

The release process

The release process for a normal (non-hotfix) release consists of several phases:

Preparing the release commit

Note: This is a new practice since we introduced -dev versions and the changelog, and is expected to be refined as we get more experience with it.

- Determine the version for the new release (see Versioning).
- Create a new release branch based on the commit you want to eventually release. For example:

      $ git checkout -b 0.5.2-release origin/main

- Replace the development version with the final version in the crates' Cargo.toml files. For example, 0.5.2-dev should become 0.5.2. Run the test suite in order to make sure Cargo.lock is up to date.
- Edit CHANGELOG.md to add a new header with the version number and date of release.
- Commit, then open a pull request for the release and mark it with the DO NOT MERGE label.
- Secure review and approval from the Lucet team for the pull request.

At this point, you should have a commit on your release branch that you are prepared to release to crates.io. Do not merge the pull request yet! Instead, proceed to release the crates.

Releasing to crates.io

Releasing a workspace full of interdependent crates can be challenging. Crates must be published in the correct order, and any cyclic dependencies that might be introduced via [dev-dependencies] must be broken. While there is interest in making this smoother, for now we have to muddle through more manually.

- Authenticate with cargo login using a Github account with the appropriate access to the Lucet repository. You should only have to do this once per development environment.
- Ensure that you have the commit checked out that you would like to release.
- Ensure that the version in all of the Lucet Cargo.toml files matches the version you expect to release. Between releases, the versions will end in -dev; if this is still the case, you'll need to replace this version with the appropriate version according to the guidelines above, likely through a PR.
- Edit lucet-validate/Cargo.toml and make the following change (note the leading #):

       [dev-dependencies]
      -lucet-wasi-sdk = { path = "../lucet-wasi-sdk", version = "=0.5.2" }
      +#lucet-wasi-sdk = { path = "../lucet-wasi-sdk", version = "=0.5.2" }
       tempfile = "3.0"

  This breaks the only cycle that exists among the crates as of 0.5.1; if other cycles develop, you'll need to similarly break them by temporarily removing the dev dependency.
- Begin publishing the crates in a topological order by cd-ing into each crate and running cargo publish --allow-dirty (the tree should only be dirty due to the cycles broken above). While we would like to run cargo publish --dry-run beforehand to ensure all of the crates will be successfully published, this will fail for any crates that depend on other Lucet crates, as the new versions will not yet be available to download.

  Do not worry too much about calculating the order ahead of time; if you get it wrong, cargo publish will tell you which crates need to be published before the one you tried. An order which worked for the 0.5.1 release was:

  - lucet-module
  - lucet-validate
  - lucetc
  - lucet-wasi-sdk
  - lucet-objdump
  - lucet-runtime-macros
  - lucet-runtime-internals
  - lucet-runtime-tests
  - lucet-runtime
  - lucet-wasi

It is unlikely but not impossible that a publish will fail in the middle of this process, leaving some of the crates published but not others. What to do next will depend on the situation; please consult with the Lucet team.

- Ensure the new crates have been published by checking for matching version tags on the Lucet crates.

Congratulations, the new crates are now on crates.io! 🎉
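Publishing "in a topological order" means every crate goes after all of the crates it depends on. A minimal sketch using Kahn's algorithm; the crate names and dependency edges below are hypothetical and chosen only for illustration (they are not read from Lucet's Cargo.toml files), and dev-dependency cycles are assumed to have been broken already.

```rust
use std::collections::{BTreeMap, VecDeque};

/// Return an order in which every crate comes after its dependencies, or
/// None if a cycle remains. Every dependency must also appear as a key.
fn publish_order<'a>(deps: &BTreeMap<&'a str, Vec<&'a str>>) -> Option<Vec<&'a str>> {
    // Number of not-yet-published dependencies for each crate.
    let mut remaining: BTreeMap<&'a str, usize> =
        deps.iter().map(|(&c, ds)| (c, ds.len())).collect();
    // Reverse edges: dependency -> crates that depend on it.
    let mut dependents: BTreeMap<&'a str, Vec<&'a str>> = BTreeMap::new();
    for (&c, ds) in deps {
        for &d in ds {
            dependents.entry(d).or_default().push(c);
        }
    }
    // Start with crates that have no dependencies at all.
    let mut ready: VecDeque<&'a str> = remaining
        .iter()
        .filter(|&(_, &n)| n == 0)
        .map(|(&c, _)| c)
        .collect();
    let mut order = Vec::new();
    while let Some(c) = ready.pop_front() {
        order.push(c);
        for &d in dependents.get(c).map(|v| v.as_slice()).unwrap_or(&[]) {
            let n = remaining.get_mut(d).unwrap();
            *n -= 1;
            if *n == 0 {
                ready.push_back(d);
            }
        }
    }
    // Crates stuck in a cycle never reach zero and are left out.
    if order.len() == deps.len() { Some(order) } else { None }
}

fn main() {
    // Hypothetical crates: crate -> the crates it depends on.
    let mut deps: BTreeMap<&str, Vec<&str>> = BTreeMap::new();
    deps.insert("core", vec![]);
    deps.insert("compiler", vec!["core"]);
    deps.insert("runtime", vec!["core"]);
    deps.insert("cli", vec!["compiler", "runtime"]);
    println!("{:?}", publish_order(&deps));
}
```

Returning None on a leftover cycle mirrors why the dev-dependency cycle has to be broken by hand before publishing.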

Tagging and annotating the release in Git

- Undo any changes in your local tree to break cycles.
- Tag the release; --sign is optional but recommended if you have code signing configured:

      $ git tag --annotate --sign -m '0.5.2 crates.io release' 0.5.2
      $ git push --tags

- Browse to this version's tag on the Github tags page, click Edit tag, and then paste this release's section of CHANGELOG.md into the description. Enter a title like 0.5.2 crates.io release, and then click Publish release.

Merging the release commit

- Edit the versions in the repo once more, this time to the next patch development version. For example, if we just released 0.5.2, change the version to 0.5.3-dev.
- Commit, remove the DO NOT MERGE label from your release PR, and seek final approval from the Lucet team.
- Merge the release PR, and make sure the release branch is deleted. The release tag will not be deleted, and will be the basis for any future hotfix releases that may be required.
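The two version edits in this process (dropping -dev before the release, then moving to the next patch development version afterwards) are mechanical string transformations. A minimal sketch, assuming plain MAJOR.MINOR.PATCH versions with an optional -dev suffix; release_version and next_patch_dev are hypothetical helpers, not part of any Lucet tooling.

```rust
/// "0.5.2-dev" -> "0.5.2": drop the development suffix.
fn release_version(dev: &str) -> Option<String> {
    dev.strip_suffix("-dev").map(str::to_owned)
}

/// "0.5.2" -> "0.5.3-dev": bump the patch number and re-add the suffix.
fn next_patch_dev(released: &str) -> Option<String> {
    let mut parts = released.split('.');
    let major: u64 = parts.next()?.parse().ok()?;
    let minor: u64 = parts.next()?.parse().ok()?;
    let patch: u64 = parts.next()?.parse().ok()?;
    if parts.next().is_some() {
        return None; // not a plain MAJOR.MINOR.PATCH version
    }
    Some(format!("{}.{}.{}-dev", major, minor, patch + 1))
}

fn main() {
    let released = release_version("0.5.2-dev").unwrap();
    println!("{} then {}", released, next_patch_dev(&released).unwrap());
}
```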

Lucet security overview

This document provides a high-level summary of the security architecture of the Lucet project. It is meant to be used for orientation and a starting point for deploying a secure embedding of Lucet.

Security model

The Lucet project aims to provide support for secure execution of untrusted code. The project does not provide a complete secure sandbox framework at this time; security is achieved through a combination of Lucet-supplied security controls and user-supplied security controls.

At a high level, this jointly-constructed security architecture aims to prevent untrusted input, data, and activity from compromising the security of trusted components. It also aims to prevent an untrusted actor from compromising the security (e.g. data and activity) of another untrusted actor. For example, one user of a Lucet embedding should not be able to affect the security of another user of the same Lucet embedding.

Some security requirements for the Lucet project have not been implemented yet. See the remainder of this document as well as project Github issues for more information. Note that even when Lucet project security goals have been met, overall system security requirements will vary by embedding.

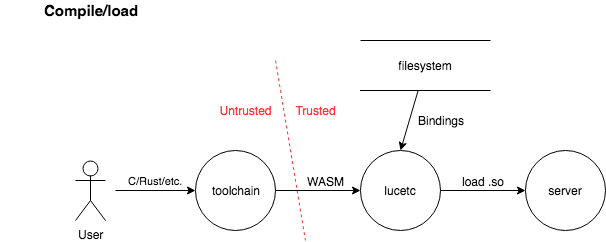

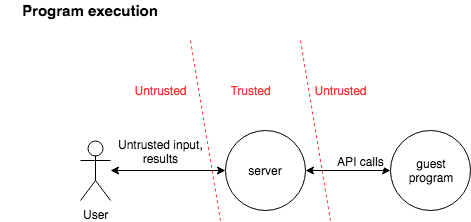

The Lucet security model can be summarized via two simplified execution scenarios: compiling/loading of sandboxed guest code and execution of untrusted guest programs. These scenarios are described in terms of the following levels.

- Trusted: refers to code, processes, or inputs that are fully trusted and generally controlled by the administrator of a system that runs or embeds Lucet components.

- Untrusted: refers to code, processes, or inputs that are completely untrusted and generally supplied by a third party. For example, user-supplied Wasm code is untrusted.

The scenarios are modeled as simplified data flow diagrams below. draw.io diagram source files are available here.

Compile/load scenario

In the compile/load scenario, a user provides untrusted WebAssembly code to the lucetc compiler. The lucetc compiler consumes this code along with trusted bindings and produces a shared object file. A trusted application (e.g. server) that embeds lucet-runtime then loads the guest program.

Program execution scenario

In the program execution scenario, an untrusted third party end-user sends data to a trusted server that has loaded a guest program (via the compile/load scenario above). The trusted server handles this data and passes it to an instance of the untrusted guest program for processing. The guest program may call into trusted server APIs to perform privileged processing, such as further communication with the end-user, untrusted network endpoints, etc. before execution terminates.

Security requirements

This section summarizes salient security requirements for the Lucet projects in terms of high-level attack scenarios. As mentioned above, Lucet does not provide a complete secure sandbox framework at this time; security is achieved through a combination of Lucet-supplied security controls and user-supplied security controls.

Attacks against compilation process

An attacker may be able to supply a malicious input file to the lucetc compiler toolchain in the context of the “compile/load” scenario above, with a goal of compromising lucetc and/or the host system it is executing within.

Lucet is designed to prevent elevation-of-privilege attacks against the lucetc compiler toolchain. Due to the nature of WebAssembly applications, upstream components of the lucetc compiler (particularly Cranelift) generally have similar design goals in this respect, and have corresponding security measures in place. The Lucet project has undergone an initial security assessment.

Bugs in lucetc that can lead to information leaks, elevation of privilege (e.g. arbitrary remote code execution) and otherwise compromise security attributes are considered security vulnerabilities in the context of the Lucet project.

Attack vectors stemming from asymmetric consumption of resources inherent in compilation processes, for example consumption of CPU or memory for large or complex inputs, should be addressed by user/administrator via environmental controls or similar. For example, a lucetc deployment could limit input size earlier in the processing flow, include cgroup runtime controls, etc.

Note that an evolving compiler toolchain like lucetc presents a rich attack surface that will likely require ongoing patching of vulnerabilities. It is highly recommended that administrators deploy additional protections against common classes of attacks for defense-in-depth. For example, the terrarium project runs lucetc compilation jobs in minimal, single-use, security-hardened containers in an isolated environment subject to runtime security monitoring.

Guest-to-host attacks

An attacker can supply malicious guest code to a Lucet embedding. Bugs in lucetc, lucet-runtime, or any other project components that allow code generated by an attacker to elevate privileges against the embedding host, crash the host, leak host data, or otherwise compromise the host’s security are considered security vulnerabilities. Correspondingly, bugs in Lucet that compromise the security policies of system components (e.g. WASI capabilities policies) are considered security vulnerabilities.

Lucet leverages WebAssembly semantics, control flow, and operational memory isolation models to prevent broad classes of attacks against the host embedding (see the WebAssembly docs for details). Specifically, Lucet provides WebAssembly-based mechanisms for isolating most faults to a specific instance of a guest program; in these cases mitigations can be applied (e.g. alerting, guest banning, etc.) and execution of the host process can continue unabated. Lucet is compatible with the WebAssembly System Interface (WASI) API for system interfaces, which supplies a capabilities-based security model for system resource access. Lucet is designed to provide a baseline for integration with additional host sandboxing technologies, such as seccomp-bpf.

Host function call bindings supplied by the Lucet user/administrator are analogous to WebAssembly imported functions. Lucet project components aim to generate code that provides ABI-level consistency checking of function call arguments (work in progress), but vulnerabilities in host-side functionality explicitly defined and supplied by Lucet administrators (e.g. memory corruption in an embedding server’s C code) are considered out of scope for the Lucet project.

Caveats

- Lucet does not provide complete protection against transient/speculative execution attacks against the host. Efforts are underway in lucetc and upstream projects to supply industry-standard protections to generated native code, but Lucet users/administrators must deploy additional defenses, such as protecting imported function APIs from speculative execution, applying privilege separation, site isolation, sandboxing technology and so on.

- Support for automated ABI-level consistency checking of function call arguments is not complete. In the meantime, Lucet users/administrators must implement this checking.

- Lucet is a new technology and under active development. Designers and architects should plan to monitor releases and regularly patch Lucet to benefit from remediation of security vulnerabilities.

Guest-to-guest attacks

This scenario is similar to the previous one, except that the attacker is targeting another guest. Similarly, bugs in lucetc, lucet-runtime, or any other project components that allow code generated by an attacker to leak data from other guests or otherwise compromise the security of other guests are considered vulnerabilities.

The protections, responsibilities, and caveats defined in the previous section apply to this attack scenario as well.

Attacks against guest programs

An attacker may attempt to exploit a victim guest program that is executing in a Lucet host embedding. Lucet provides WebAssembly-based security guarantees for guest programs, but WebAssembly programs may still be vulnerable to exploitation. For example, memory allocated within a linear memory region may not have conventional protections in place, type confusion or other basic memory corruption vulnerabilities that are not obviated by WebAssembly may be present in guest programs, and so on. It is the Lucet administrator’s responsibility to protect vulnerable guest program logic beyond WebAssembly-provided safety measures.
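The point about linear memory can be made concrete with a toy model. The sketch below is not Lucet code: LinearMemory, Trap, and the fixed offsets standing in for a guest allocator are hypothetical. It models the property that matters here: a WebAssembly load or store is checked only against the bounds of the whole linear memory, so an overflow from one guest object into an adjacent one does not trap.

```rust
/// Toy wasm32 linear memory. As in WebAssembly, every access is checked
/// against the size of the whole memory and nothing finer-grained.
pub struct LinearMemory {
    bytes: Vec<u8>,
}

/// A WebAssembly trap (here: an out-of-bounds memory access).
#[derive(Debug, PartialEq)]
pub struct Trap;

impl LinearMemory {
    pub fn new(size: usize) -> Self {
        LinearMemory { bytes: vec![0; size] }
    }

    /// Models a guest loop of one-byte stores: each store is bounds-checked
    /// on its own, so bytes written before a trapping store stay written.
    pub fn store_bytes(&mut self, addr: u32, data: &[u8]) -> Result<(), Trap> {
        for (i, &b) in data.iter().enumerate() {
            let a = (addr as usize).checked_add(i).ok_or(Trap)?;
            *self.bytes.get_mut(a).ok_or(Trap)? = b;
        }
        Ok(())
    }

    /// Models a guest load of `len` bytes starting at `addr`.
    pub fn load_bytes(&self, addr: u32, len: usize) -> Result<&[u8], Trap> {
        let start = addr as usize;
        let end = start.checked_add(len).ok_or(Trap)?;
        self.bytes.get(start..end).ok_or(Trap)
    }
}
```

For example, if the guest places an 8-byte buffer at offset 16 and an 8-byte secret at offset 24, copying 12 bytes into the buffer succeeds and silently overwrites the first 4 bytes of the secret; only an access past the end of the entire memory traps. Protecting against that kind of bug is the responsibility described above.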

Report a security issue

See the project's SECURITY.md

Changelog

Unreleased

- Added install_lucet_signal_handler() and remove_lucet_signal_handler(), along with Instance::ensure_signal_handler_installed() and Instance::ensure_sigstack_installed() options to control the automatic installation and removal of signal handlers and alternate signal stacks. The default behaviors have not changed.

- Added Instance::run_start() to the public API, which runs the Wasm start function if it is present in that instance's Wasm module. It does nothing if there is no start function. Creating or resetting an instance no longer implicitly runs the start function. Embedders must ensure that run_start() is called before calling any other exported functions. Instance::run() will now return Err(Error::InstanceNeedsStart) if the start function is present but hasn't been run since the instance was created or reset.

- Encoded the Wasm start function in Lucet module metadata, rather than as a specially-named symbol in the shared object. This reduces contention from dlsym operations when multiple threads run Lucet instances concurrently.

- Upgraded the libloading dependency, allowing for more specific error messages from dynamic loading operations.

- Corrected a race condition where a KillSwitch fired while lucet-runtime was handling a guest fault could result in a SIGALRM or panic in the Lucet embedder.

- Converted the &mut Vmctx argument to hostcalls into &Vmctx. Additionally, all Vmctx methods now take &self, where some methods such as yield previously took &mut self. These methods still require that no other outstanding borrows (such as the heap view) are held across them, but that property is checked dynamically rather than at compile time.

0.6.1 (2020-02-18)

- Added metadata to compiled modules that records whether instruction counting instrumentation is present.

- Made lucetc more flexible in its interpretation of the LD environment variable. It now accepts a space-separated set of tokens; the first token specifies the program to invoke, and the remaining tokens specify arguments to be passed to that program. Thanks, @froydnj!

- Added public LucetcOpt methods to configure the canonicalize_nans setting. Thanks, @roman-kashitsyn!

- Fixed lucet-runtime's use of CPUID to not look for extended features unless required by the module being loaded, avoiding a failure on older CPUs where that CPUID leaf is not present. Thanks, @shravanrn!

0.6.0 (2020-02-05)

- Added free_slots(), used_slots(), and capacity() methods to the Region trait.

- Added a check to ensure the Limits signal stack size is at least MINSIGSTKSZ, and increased the default signal stack size on macOS debug builds to fit this constraint.

- Added an option to canonicalize NaNs to the lucetc API. Thanks, @DavidM-D!

- Restored some of the verbosity of pretty-printed errors in lucetc and lucet-validate, with more on the way.

- Fixed OS detection for LDFLAGS on macOS. Thanks, @roman-kashitsyn!
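For context on the NaN canonicalization option added in this release: WebAssembly permits some nondeterminism in the sign and payload bits of NaN results from floating-point operations, so the same program can observe different NaN bit patterns on different hardware. Canonicalization replaces every NaN result with one fixed bit pattern. The sketch below shows only the per-value operation, assuming the positive canonical NaN defined by the WebAssembly spec; it does not show where lucetc inserts this in generated code, and the function names are hypothetical.

```rust
/// Canonical quiet NaN bit patterns from the WebAssembly spec: exponent all
/// ones, only the most significant mantissa bit set. The spec allows either
/// sign; this sketch picks the positive one.
pub const CANONICAL_NAN_F32: u32 = 0x7fc0_0000;
pub const CANONICAL_NAN_F64: u64 = 0x7ff8_0000_0000_0000;

/// Replace any NaN with the canonical NaN; leave all other values untouched.
pub fn canonicalize_f32(x: f32) -> f32 {
    if x.is_nan() {
        f32::from_bits(CANONICAL_NAN_F32)
    } else {
        x
    }
}

/// The same operation for 64-bit floats.
pub fn canonicalize_f64(x: f64) -> f64 {
    if x.is_nan() {
        f64::from_bits(CANONICAL_NAN_F64)
    } else {
        x
    }
}
```

After canonicalization, any two NaN results compare equal bit-for-bit, which is what makes execution deterministic across platforms.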

0.5.1 (2020-01-24)

- Fixed a memory corruption bug that could arise in certain runtime configurations. (PR) (RustSec advisory)

0.5.0 (2020-01-24)

- Lucet officially became a project of the Bytecode Alliance 🎉.

- Integrated wasi-common as the underlying implementation for WASI in lucet-wasi.

- Updated Cranelift to version 0.51.0.

- Fixed a soundness bug by changing the types of the Vmctx::yield*() methods to require exclusive &mut self access to the Vmctx. This prevents resources like embedder contexts or heap views from living across yield points, which is important for safety since the host can modify the data underlying those resources while the instance is suspended.

- Added the #[lucet_hostcall] attribute to replace lucet_hostcalls!, which is now deprecated.

- Added the ability to specify an alignment for the base of a MmapRegion-backed instance's heap. Thanks, @shravanrn!

- Added a --target option to lucetc to allow cross-compilation to architectures other than the host's. Thanks, @froydnj!

- Changed the Cargo dependencies between Lucet crates to be exact (e.g., "=0.5.0" rather than "0.5.0") rather than allowing semver differences.

- Fixed the KillSwitch type not being exported from the public API, despite being usable via Instance::kill_switch().

- Improved the formatting of error messages.

- Ensured the lucet-wasi executable properly links in the exported symbols from lucet-runtime.
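The heap-alignment option added in 0.5.0 comes down to ordinary power-of-two rounding. The sketch below shows only that arithmetic; align_up and place_heap are hypothetical helpers, and it assumes the common technique of reserving a mapping larger than the heap and placing the heap at the first aligned address inside it, which may or may not be how MmapRegion does it.

```rust
/// Round `addr` up to the next multiple of `align`, which must be a power
/// of two. Returns None if `align` is not a power of two (including 0) or
/// if rounding up would overflow.
pub fn align_up(addr: usize, align: usize) -> Option<usize> {
    if !align.is_power_of_two() {
        return None;
    }
    let mask = align - 1;
    addr.checked_add(mask).map(|v| v & !mask)
}

/// Hypothetical helper: given a raw mapping [base, base + len), return the
/// first `align`-aligned heap base inside it and the bytes lost to padding,
/// or None if an aligned heap of `heap_len` bytes would not fit.
pub fn place_heap(base: usize, len: usize, heap_len: usize, align: usize) -> Option<(usize, usize)> {
    let heap_base = align_up(base, align)?;
    let padding = heap_base - base;
    if padding.checked_add(heap_len)? > len {
        return None;
    }
    Some((heap_base, padding))
}
```

Reserving heap_len + align - 1 bytes is always enough for place_heap to succeed, at the cost of up to align - 1 bytes of padding.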

0.4.3 (2020-01-24)

- Backported the fix for a memory corruption bug that could arise in certain runtime configurations. (PR) (RustSec advisory)

Apache License

Version 2.0, January 2004

http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, "control" means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files.

"Object" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or Object form, made available under the License, as indicated by a copyright notice that is included in or attached to the work (an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object form, that is based on (or derived from) the Work and for which the editorial revisions, annotations, elaborations, or other modifications represent, as a whole, an original work of authorship. For the purposes of this License, Derivative Works shall not include works that remain separable from, or merely link (or bind by name) to the interfaces of, the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including the original version of the Work and any modifications or additions to that Work or Derivative Works thereof, that is intentionally submitted to Licensor for inclusion in the Work by the copyright owner or by an individual or Legal Entity authorized to submit on behalf of the copyright owner. For the purposes of this definition, "submitted" means any form of electronic, verbal, or written communication sent to the Licensor or its representatives, including but not limited to communication on electronic mailing lists, source code control systems, and issue tracking systems that are managed by, or on behalf of, the Licensor for the purpose of discussing and improving the Work, but excluding communication that is conspicuously marked or otherwise designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity on behalf of whom a Contribution has been received by Licensor and subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license to reproduce, prepare Derivative Works of, publicly display, publicly perform, sublicense, and distribute the Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable (except as stated in this section) patent license to make, have made, use, offer to sell, sell, import, and otherwise transfer the Work, where such license applies only to those patent claims licensable by such Contributor that are necessarily infringed by their Contribution(s) alone or by combination of their Contribution(s) with the Work to which such Contribution(s) was submitted. If You institute patent litigation against any entity (including a cross-claim or counterclaim in a lawsuit) alleging that the Work